The latest edition of our Technology Trends special report once again underscores the significance of artificial intelligence (AI), which emerges as the undisputed focal point of this publication. This pre-eminent position reflects the extraordinary pace at which AI continues to expand its reach across the technology ecosystem, as evidenced by the emergence of new solutions—such as intelligent agents, AI-driven process orchestration and automation, advanced robotics, and AI-powered cloud services, among others. Taken together, these developments reinforce AI’s role as a strategic, organization-wide pillar in digital transformation initiatives worldwide.

However, the rapid evolution of AI contrasts with the challenge faced by many organizations in aligning its practical adoption with market expectations. As highlighted by the consulting firm McKinsey in The State of AI in 2025: Agents, Innovation, and Transformation, “While AI tools are now commonplace, most organizations have not yet embedded them deeply enough into their workflows and processes to realize material enterprise-level benefits.” Moreover, “For most organizations, AI use remains in pilot phases”. Indeed, nearly two-thirds of executives surveyed by the consultancy report that “they have not yet begun to scale their AI programs across their organizations”.

Beyond AI and the innovations arising from its convergence with other technologies, several additional trends are expected to shape the business landscape in 2026, most notably quantum computing. In this context, it is worth highlighting that the Massachusetts Institute of Technology (MIT) has launched an ambitious interdisciplinary initiative that applies quantum advances across science, technology, industry and national security. Known as QMIT, this initiative—physically based on the MIT campus—brings together researchers, students and companies interested in exploring high-impact quantum applications, to develop practical solutions in computing, sensors, networks, simulations, and algorithms.

Against this backdrop of continuous technological evolution, organizations assessing new digital transformation investments to enhance competitiveness, foster innovation, and promote sustainability are invited to consult this publication. Updated annually, it provides a comprehensive, expert analysis of the strategic technology trends with the greatest potential impact in 2026.

Technology trend 1: Cloud Services with AI

The adoption of AI embedded within cloud services is set to transform the way organizations manage information technology (IT) operations. This integration marks a shift whereby AI moves beyond a supporting role to become a core component, embedded across the entire spectrum—from infrastructure management to application deployment.

AI-powered cloud services refer to the integration of AI capabilities into cloud infrastructure and platforms, providing the technological foundation required to develop more intelligent applications and processes. According to Gartner, in AI-Enabling Cloud Services are the Future of Cloud, “by 2030, over 80% of enterprises will deploy industry-specific AI agents in support of critical business objectives, up from less than 10% today, and more than 60% will conduct intensive AI model activity across multiple clouds.”

Building on this technological foundation, AI-enabled industrial cloud solutions are emerging as a more specialized, sector-focused approach. These solutions combine AI, data and analytics to optimize mission-critical processes within specific industries. In essence, while AI-integrated cloud services deliver the underlying infrastructure and general-purpose capabilities, industrial cloud solutions apply these capabilities directly to concrete business processes and challenges.

Gartner also emphasizes the importance of optimizing the underlying infrastructure, i.e., the technological and computing resources required to run AI systems, including servers, software platforms, storage, and networks that support these workloads. According to the Stamford-based firm, organizations that fail to optimize their infrastructure “will pay over 50% more than those that do.”

This challenge is compounded by the energy demand associated with AI workloads, which, according to Gartner, “will more than triple by 2030, requiring a complete overhaul of data center power and cooling infrastructure.”

Another critical dimension of cloud strategies is “digital sovereignty”, understood as control over data, operations and technology. This aspect is gaining increasing prominence in light of geopolitical tensions and evolving regulatory frameworks. In this regard, Gartner recommends that organizations address digital sovereignty challenges by defining requirements across technical, operational, and data dimensions, evaluating hosting options, engaging with providers, and ensuring that cloud strategies remain sufficiently flexible to adapt to geopolitical and regulatory developments.

Technology trend 2: Service as a Product — the Modular Transformation of IT Services

IDC estimates that by 2029, “30% of global IT services will be delivered as modular, platform-enabled products, driven by demand for speed, transparency, GEN-AI, and agentic AI-enabled autonomous service orchestration”. This projection, outlined in IDC FutureScape: Worldwide IT Industry 2026, reflects a profound shift in the way services are designed, consumed and scaled, compelling providers to adopt product management practices and explore new commercial models, such as subscription-based offerings or outcome-based pricing.

Organizations are increasingly seeking outcome-oriented service engagements that reduce complexity and accelerate time to value. Modular service components, delivered through platforms, ensure consistent outcomes, enable flexible integration with other systems, and support governance at scale.

To capitalize on the opportunities presented by this technology trend, IDC recommends that CIOs assess provider maturity in terms of AI assurance, i.e., the ability to ensure that AI systems are trustworthy, secure, and compliant with regulations and standards, as well as the orchestration tools and integration capabilities they offer, to avoid fragmented delivery and minimize compliance risks.

For organizations that struggle to measure and realize the value of such investments, IDC advises the adoption of “robust value realization practices”. This entails establishing frameworks, processes, metrics and governance models to assess generated impact, monitor progress, and ensure that technology investments translate into tangible business outcomes.

The transition towards models in which services are conceived as products requires a thorough rethinking of delivery frameworks by IT services firms. Success depends on the ability to provide platform-enabled services that are modular, scalable, and supported by integrated governance mechanisms that ensure control, compliance and reliable operations, while incorporating industry-specific intellectual property, fostering collaborative innovation, and enabling adaptive service configurations.

IDC concludes that delivering services as products “will become a strategic imperative for both buyers and providers, navigating the next wave of AI-fueled business transformation.”

Technology trend 3: Agentic AI

Agentic AI, also referred to as agent-based AI, has established itself as one of the leading technology trends in 2025 and is set to maintain a strong upward trajectory in 2026. This technology is grounded in autonomous systems capable of making decisions, setting objectives, and executing complex strategies without continuous human supervision. In doing so, agentic AI reshapes the interaction between people and machines and extends the scope for managing business operations by enabling systems to assume operational responsibilities independently.

Unlike traditional AI systems, which are largely confined to pattern recognition and predictive analytics, AI agents can understand context and adapt their strategies as situations evolve. Beyond merely responding to instructions, as conventional systems do, they identify opportunities, define goals, and coordinate the resources required to achieve them. Furthermore, these agents can operate collaboratively: each agent focuses on a specific task and, when combined, they form intelligent networks capable of addressing complex and dynamic problems.

AI agents represent a significant evolution in enterprise AI, as they pave the way for the transition “from a reactive tool to a proactive, goal-driven virtual collaborator”, as outlined by McKinsey in Seizing the agentic AI advantage. As a result, agents offer a pathway to overcoming what the consultancy refers to as the “generative AI paradox”: the observation that many organizations adopt cutting-edge AI technologies without seeing a noticeable impact on business performance or outcomes.

To address this paradox, McKinsey argues that AI agents have the potential to automate complex business processes by combining autonomy, planning, memory and integration. Thus, AI moves beyond a reactive role and becomes an autonomous virtual assistant with planning capabilities and a strong focus on delivering outcomes.

However, maximizing the potential of agentic AI requires more than embedding it into existing workflows—the set of sequential activities and tasks involving collaboration, information exchange and decision-making to achieve a specific objective. Organizations must go a step further and redesign these workflows from the ground up, positioning agents as central elements of the process.

With regard to enterprise adoption, according to McKinsey data from The State of AI in 2025: Agents, Innovation, and Transformation, 23% of organizations are currently scaling AI agents in at least one specific business area, while 39% remain in the experimentation phase. Despite this progress, adoption remains limited, as most deployments are concentrated in one or two areas, and in any given function, no more than 10% of organizations have scaled AI agents.

Technology trend 4: AI Process Automation

The so-called “generative AI paradox”, referred to in the previous section of this technology trends report, describes a situation in which many organizations adopt advanced AI technologies without achieving corresponding improvements in business performance or outcomes. This implies that, even where AI has been implemented, its transformative potential does not always translate into tangible benefits. McKinsey links this paradox to an imbalance between horizontal and vertical AI use cases. While horizontal use cases tend to be widely adopted, vertical use cases, which have the greatest potential for economic impact, often remain at early stages of development.

On the one hand, horizontal use cases, such as enterprise copilots and chatbots, have seen widespread adoption. A notable example is Microsoft 365 Copilot, which, according to McKinsey, is used by nearly 70% of Fortune 500 companies. These tools are primarily designed to enhance individual productivity by saving time on routine tasks and facilitating information management and synthesis. However, the benefits they generate are dispersed across the workforce, making it difficult for them to translate into measurable, organization-wide results.

On the other hand, vertical use cases, which are tied to specific business areas and concrete operational processes, have seen limited scaling in most companies. According to McKinsey, fewer than 10% of these initiatives progress beyond the pilot stage, and even when implemented, they tend to focus on automating isolated tasks within a process and operate reactively. As a result, their contribution to operational efficiency and overall business performance is limited, and they fail to realize the transformative potential that AI could offer at a structural level.

This imbalance between the two categories of use cases can be partly explained by the relative ease of implementing horizontal solutions, such as Microsoft Copilot or Google AI Workspace, which do not require workflow reconfiguration or significant organizational change management efforts. In addition, the rapid uptake of internal chatbots has been driven by the need to protect sensitive information and ensure compliance with corporate policies.

According to McKinsey, the real breakthrough occurs with the automation of complex workflows through AI agents, which extend the capabilities of traditional large language models (LLMs), moving beyond reactive content generation towards autonomous, goal-oriented task execution. Unlike standalone LLMs, which operate in isolation and lack contextual memory across sessions, these agents incorporate additional technological components that provide memory, planning, integration and orchestration capabilities. This enables them to understand objectives, break them down into subtasks, interact with systems and people, execute actions, and adapt in real time with minimal human intervention.
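The capabilities described above (planning, memory, integration, and orchestration) can be illustrated with a deliberately small sketch. Everything in it is hypothetical: the `Agent` class, the `lookup_invoices` and `summarize` tools, and the fixed two-step plan are stand-ins chosen for illustration, and a real agent would delegate planning to an LLM instead of hard-coding it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A tool receives the task argument plus the agent's memory (hypothetical interface).
Tool = Callable[[str, List[str]], str]


@dataclass
class Agent:
    """Toy agent: plans subtasks, calls registered tools, and remembers results."""
    tools: Dict[str, Tool]                            # integration layer
    memory: List[str] = field(default_factory=list)   # persists across steps

    def plan(self, goal: str) -> List[Tuple[str, str]]:
        # Assumption: a fixed plan stands in for LLM-based goal decomposition
        # so that the example stays deterministic.
        return [("lookup", goal), ("summarize", goal)]

    def run(self, goal: str) -> List[str]:
        # Orchestration loop: execute each subtask and store the outcome.
        results = []
        for tool_name, argument in self.plan(goal):
            tool = self.tools.get(tool_name)
            if tool is None:
                outcome = f"skipped '{tool_name}': tool not registered"
            else:
                outcome = tool(argument, self.memory)
            self.memory.append(outcome)
            results.append(outcome)
        return results


def lookup_invoices(goal: str, memory: List[str]) -> str:
    # Hypothetical stand-in for a call to an enterprise system.
    return f"invoice data for '{goal}'"


def summarize(goal: str, memory: List[str]) -> str:
    # Uses memory, so it can build on what earlier steps produced.
    return f"summary of {len(memory)} prior result(s)"


agent = Agent(tools={"lookup": lookup_invoices, "summarize": summarize})
results = agent.run("overdue invoices")
```

The second step depends on what the first step stored in memory, which is the property that separates this pattern from a single stateless prompt to a standalone model.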

By combining these capabilities, general-purpose copilots evolve from reactive assistants into proactive collaborators, capable of monitoring dashboards, triggering workflows, tracking tasks and delivering relevant insights in real time. Nevertheless, the most significant impact is achieved at the vertical level, where agentic AI enables the automation of complex business processes involving multiple steps, stakeholders and systems, ultimately transforming AI into a strategic engine capable of delivering tangible and sustainable results.

Technology trend 5: Sustainable Technology

Sustainable technology is a broad concept encompassing solutions designed to uphold principles of environmental protection and ensure the responsible use of resources, while minimizing ecological impact. Technologies within this domain enable companies and institutions to optimize processes, reduce their environmental footprint, and guide strategic planning in line with responsible management criteria.

By 2026, significant changes are expected to be driven by a range of initiatives across both technology services and the development of more environmentally responsible solutions. These efforts will directly shape how organizations plan, adopt, and manage technology.

- AI for sustainability. The article Artificial intelligence for sustainability, published in Discover Conservation and authored by Stockholm Environment Institute (SEI) researchers Julia Barrot and Matthew Fielding, examines the role of AI and Earth Observation (EO) technologies in sustainable forest management and climate change mitigation. Specifically, the combination of these two technologies enables the monitoring, mapping and interpretation of forest ecosystems across temporal and spatial scales that would be unattainable using traditional methods. Key applications include predicting and monitoring wildfires, assessing large-scale environmental change, detecting illegal logging, and planning sustainable forest management. Nevertheless, the combined use of AI and EO also entails certain challenges, including algorithmic bias, data quality limitations, lack of transparency, and inequalities in access to technology. To maximize benefits and mitigate risks, it is essential to establish ethical guidelines, robust governance mechanisms and human oversight, as well as to integrate traditional knowledge and promote international cooperation. Thus, the convergence of AI and EO emerges as a strategic asset for supporting forest conservation, protecting biodiversity and strengthening global climate resilience.

- Green computing and decarbonization. These two elements play a pivotal role in sustainability strategies. In pursuit of carbon emission reduction commitments, organizations are increasingly adopting energy-efficient technologies, such as sustainable data centers, alongside the implementation of the edge computing model, which optimizes energy use by processing data closer to its source, improving performance, reducing latency, and limiting data transfer to centralized infrastructures. As a result, organizations can lower their carbon footprint, optimize costs, and achieve a more sustainable operating model. According to IDC FutureScape: Worldwide IT Industry 2025 Predictions, “By 2026, 60% of enterprises will implement Sustainable AI Frameworks, leveraging data-driven decisions to scale AI operations across datacenter locations while meeting decarbonization goals.”

- Resource efficiency and circularity. Organizations are increasingly focused on optimizing resource use, reducing waste and extending product lifecycles through more sustainable design. Illustrative examples include Microsoft, which has exceeded its internal targets for server and component reuse and recycling, achieving a rate of 90.9%. Apple, in its annual environmental progress report, states that in 2025 it reduced global greenhouse gas emissions by more than 60%, aiming to achieve carbon neutrality across its environmental footprint by 2030. Similarly, Siemens has reduced its operational footprint by 66% since 2019 (excluding carbon credits), advancing towards its goal of cutting Scope 1 and Scope 2 emissions by 90% by the start of the next decade.

Technology trend 6: Quantum Computing

To understand quantum computing, it helps to briefly review its history. This journey begins with the Physics of Computation conference organized by MIT and IBM in 1981, where physicist Richard Feynman delivered a landmark address, often cited for the following excerpt: “Nature isn’t classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical.”

With this statement, the Nobel laureate highlighted that nature—and the universe as a whole—operates according to the laws of quantum mechanics. This implies that physical phenomena, relating to the behavior of subatomic particles, atoms, and molecules, cannot be accurately simulated using classical computers, which rely on binary, deterministic logic very different from the probabilistic logic of the quantum world. Consequently, to faithfully simulate the universe at a microscopic scale, it is necessary to employ computers that operate according to quantum principles such as superposition, entanglement, and interference.

Shortly after Feynman’s speech, various lines of research were initiated, which during the 1990s led to the development of two algorithms, generally identified as Shor’s factoring algorithm and Grover’s search algorithm, that demonstrated the practical applicability of quantum computing and laid the foundation for quantum computer development. Today’s machines include universal quantum computers, such as IBM’s gate-based systems with 1,121 qubits, and specialized quantum annealing systems, such as D-Wave’s with 5,000 qubits.

In recent years, significant advances have been made in quantum algorithms and software, alongside intensified efforts to construct large-scale quantum computers, involving companies such as Google, Honeywell, Intel, Microsoft, Origin Quantum, QUDOOR, and ZTE, among others.

This historical context helps to clarify the distinction between classical and quantum computing. Traditional computing relies on bits, which can assume only two possible values, ‘0’ or ‘1’, to store and process information. In quantum computing, the basic unit is the qubit, which, according to quantum physics, can be in a state of ‘0’, ‘1’, or a superposition of both. This property endows quantum computing systems with a high-dimensional computational space, known as Hilbert space, in which 𝑛 qubits can represent 2^𝑛 possible values simultaneously.
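The 2^𝑛 scaling can be made concrete with a small classical sketch. The function name `uniform_superposition` is hypothetical, and the example only builds the simplest case: every qubit in an equal superposition of ‘0’ and ‘1’. It shows that describing 𝑛 qubits classically takes 2^𝑛 amplitudes, whose squared magnitudes are the measurement probabilities. A measurement still returns only one of those basis states, so “simultaneously” refers to the state description rather than to 2^𝑛 readable answers.

```python
import math
from itertools import product


def uniform_superposition(n_qubits):
    """Return {basis_state: amplitude} for n qubits, each in an equal superposition."""
    dimension = 2 ** n_qubits                 # number of basis states in the Hilbert space
    amplitude = 1 / math.sqrt(dimension)      # equal weight on every basis state
    return {"".join(bits): amplitude for bits in product("01", repeat=n_qubits)}


state = uniform_superposition(3)

# Measurement probabilities are the squared magnitudes of the amplitudes.
probabilities = {basis: abs(amp) ** 2 for basis, amp in state.items()}

for n in (1, 3, 10, 20):
    print(f"{n} qubits -> {2 ** n} amplitudes")
```

Each added qubit doubles the size of the dictionary, which is why classical simulation of even a few dozen qubits quickly becomes impractical.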

In practice, quantum computing has the potential to transform numerous sectors. In pharmaceuticals, it can accelerate the discovery of new drugs through high-precision molecular simulation. In the energy sector, it can advance nuclear fusion research to produce clean, virtually limitless energy. It also offers the capacity to tackle previously intractable problems, such as simulating complex quantum systems or performing large-scale combinatorial optimization. Moreover, it paves the way for scientific breakthroughs in quantum mechanics and the development of derivative technologies, such as quantum cryptography.

However, large-scale quantum computing presents significant challenges. As described in the chapter “Quantum Computing: Vision and Challenges” from Quantum Computing: Principles and Paradigms (Morgan Kaufmann), the most difficult obstacle to mitigate is the decoherence of quantum states in which qubits are encoded. Decoherence occurs when qubits interact with their environment (including vibrations, microscopic movements of their supporting material, external electromagnetic fields, temperature fluctuations, radiation, subatomic particles, or inadequately isolated hardware components), leading to the loss of quantum properties and subsequent information degradation. This phenomenon constitutes a major barrier to building scalable quantum devices, as environmental noise is unavoidable even in highly controlled settings.

Noisy Intermediate-Scale Quantum (NISQ) devices represent an intermediate stage in the evolution of quantum computing. These systems have a limited number of qubits and are subject to errors and disturbances caused by decoherence. Despite these limitations, NISQ devices perform computations with available qubits while tolerating a certain amount of noise. Consequently, current efforts in quantum computing focus on two main objectives: reducing the probability of qubit decoherence and developing effective error-correction methods to compensate for information loss.
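The basic intuition behind error correction, redundancy plus a decoding rule, can be shown with a classical analogy. The sketch below is not a quantum error-correcting code: it assumes independent bit flips with probability `p` (a simplification of real decoherence) and decodes by majority vote, whereas quantum codes must detect errors through syndrome measurements without reading the encoded data directly. The function name `logical_error_rate` is hypothetical.

```python
from itertools import product


def logical_error_rate(p, copies=3):
    """Probability that majority voting over `copies` noisy bits decodes wrongly.

    Assumes each copy flips independently with probability p.
    """
    total = 0.0
    for flips in product([0, 1], repeat=copies):    # enumerate every error pattern
        n_flipped = sum(flips)
        if n_flipped > copies // 2:                 # majority corrupted: decoding fails
            total += (p ** n_flipped) * ((1 - p) ** (copies - n_flipped))
    return total


physical = 0.1
print(f"physical error rate: {physical}")
print(f"logical error rate with 3 copies: {logical_error_rate(physical):.3f}")
print(f"logical error rate with 5 copies: {logical_error_rate(physical, copies=5):.5f}")
```

With three copies the failure probability is 3p² − 2p³, so redundancy helps only while `p` stays below one half, which is one reason lowering the underlying error rate and improving correction schemes are pursued together.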

Beyond the challenge of decoherence, modern quantum devices also face the difficulty of efficiently designing and interconnecting qubits in a way that preserves entanglement. At present, qubit connectivity is limited, which restricts the execution of advanced algorithms that require many simultaneous interactions. This poses a major obstacle to implementing quantum algorithms and highlights the need for new architectures and entanglement strategies that support scalability and improved performance.

Finally, regarding the economic impact of the quantum computing market, Boston Consulting Group (BCG) notes in The Long-Term Forecast for Quantum Computing Still Looks Bright that this trend “will create $450 billion to $850 billion of economic value – sustaining a $90 billion to $170 billion market for hardware and software providers – by 2040”.

Similarly, McKinsey’s Quantum Technology Monitor indicates that “the three core pillars of QT—quantum computing, quantum communication, and quantum sensing—could together generate up to $97 billion in revenue worldwide by 2035. Quantum computing will capture the bulk of that revenue, growing from $4 billion in revenue in 2024 to as much as $72 billion in 2035”.

Technology trend 7: Post-Quantum Cryptography

The development of quantum computing, discussed in the previous section of this technology trends report, is closely linked to the forthcoming cryptographic shift. This advancement poses a threat to current encryption standards, such as RSA and ECC.

To appreciate the magnitude of this risk, it is important to remember that most current encryption schemes are based on mathematically complex problems that are computationally infeasible for classical computers to solve. However, it is only a matter of time before quantum computers can solve these problems within minutes, representing a significant security threat at both governmental and corporate levels.

According to Capgemini’s Trends in Cybersecurity report, while it remains difficult to predict precisely when quantum computers will be sufficiently advanced to jeopardize cybersecurity, expert projections for the arrival of a cryptographically relevant quantum computer (CRQC) fall between 2029 and 2035. It is estimated that within the next five years, quantum computers could compromise RSA encryption with a probability of 5–14%, increasing to 36–59% over the next decade.

In anticipation of these developments, the strategy known as “harvest now, decrypt later” is being employed by malicious actors. This involves collecting encrypted data in advance to decrypt it once quantum computing capabilities reach a sufficient level of maturity.

Concerns regarding security in the quantum era have prompted action at governmental, institutional, and corporate levels, including:

- The National Institute of Standards and Technology (NIST) in the United States has selected quantum-resistant algorithms for standardization, such as CRYSTALS-Kyber, CRYSTALS-Dilithium, SPHINCS+, and FALCON, and plans to continue evaluating additional algorithms in the coming years.

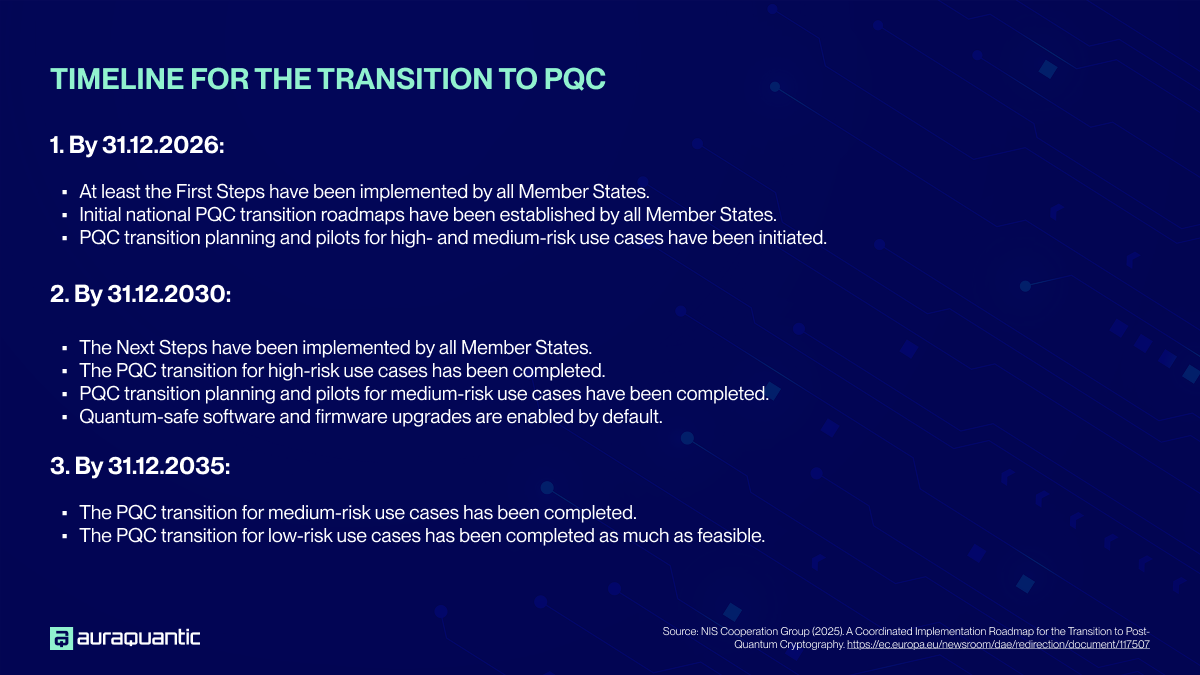

- The European Union (EU) has published a roadmap for a coordinated transition to post-quantum cryptography (PQC). According to this plan, all Member States are expected to implement initial steps, define national roadmaps, and begin transition planning and pilot projects for medium- and high-risk cases by 2026; complete the transition for high-risk cases and finalize medium-risk pilots by 2030; and, as a final step, achieve full implementation, including low-risk cases, by 2035.

- At the corporate level, quantum computing also poses a threat to the security of sensitive data and communications. Current estimates indicate that the transition to PQC will be a lengthy process, requiring several years, as new algorithms require adaptations in both hardware and software infrastructure, in addition to increased computational resource requirements.

To structure the transition to PQC, this technology trends report references A Framework for Migrating to Post‑Quantum Cryptography: Security Dependency Analysis and Case Studies, supported by the Cyber Security Research Centre Ltd., funded under the Cooperative Research Centers Program of the Australian Government, and developed in collaboration with academic and cybersecurity experts.

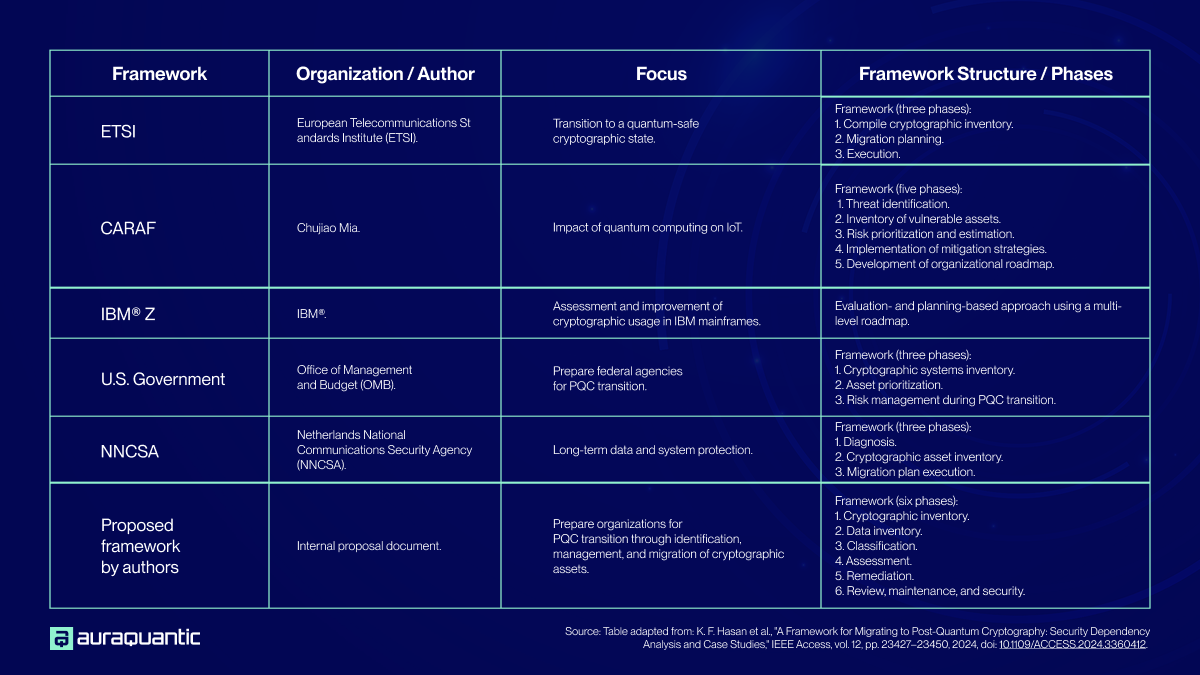

The study identifies five frameworks for approaching PQC migration, alongside a methodological proposal developed by the authors themselves. This proposal provides organizations with a structured guide to identify, manage, and migrate cryptographic assets, assess dependencies, plan the adoption of advanced algorithms, and execute a gradual, controlled, and secure transition to quantum-resistant cryptographic schemes.

In general terms, a PQC migration framework is defined as a systematic approach for replacing traditional cryptographic assets with quantum-resistant alternatives. This includes:

- Identification, classification, and recording of cryptographic assets, including cryptographic primitives, encryption keys, digital certificates, and related components.

- Comprehensive lifecycle management of these assets, covering generation, distribution, storage, rotation, and secure decommissioning.
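The identification and classification steps above can be sketched as a small inventory. This is an illustrative assumption rather than the framework from the cited study: the `CryptoAsset` fields, the asset names, and the `QUANTUM_VULNERABLE` set are all hypothetical, with the set limited to public-key algorithm families that rely on factoring or discrete logarithms.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

# Assumption for illustration: public-key schemes based on factoring or
# discrete logarithms are flagged as needing migration.
QUANTUM_VULNERABLE = {"RSA", "ECC", "ECDSA", "ECDH", "DH", "DSA"}


@dataclass
class CryptoAsset:
    name: str
    kind: str          # e.g. "certificate", "key", "primitive"
    algorithm: str
    owner: str
    rotate_by: date    # next planned rotation in the asset's lifecycle

    @property
    def needs_migration(self) -> bool:
        return self.algorithm.upper() in QUANTUM_VULNERABLE


def migration_backlog(assets: List[CryptoAsset]) -> List[CryptoAsset]:
    """Quantum-vulnerable assets, earliest rotation deadline first."""
    return sorted((a for a in assets if a.needs_migration), key=lambda a: a.rotate_by)


# Hypothetical inventory entries.
inventory = [
    CryptoAsset("vpn-gateway-cert", "certificate", "RSA", "network-team", date(2027, 1, 1)),
    CryptoAsset("backup-encryption-key", "key", "AES", "storage-team", date(2026, 6, 1)),
    CryptoAsset("code-signing-key", "key", "ECDSA", "build-team", date(2026, 3, 1)),
]

backlog = [asset.name for asset in migration_backlog(inventory)]
```

Sorting by rotation date is one possible prioritization; an organization might instead rank by data sensitivity or by how long the protected data must remain confidential.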

The following section presents the reference frameworks analyzed in the study, along with the main phases of each proposal:

Technology trend 8: AI-Powered Physical Robots

In 2025, robotics entered a decisive phase of evolution, driven by rapid technological advances and the convergence of AI, advanced sensors, and edge computing, as well as by the growth of open-source development and the increasing availability of cost-effective manufacturing components.

In line with this dynamic technological landscape, AI-driven robots have moved beyond experimental environments to become operational in a variety of business applications.

This new generation of robots represents a qualitative leap from traditional robotics, which focused on executing predefined tasks. This shift is driven by physical AI—AI systems designed to perceive, interpret, and act autonomously in the physical world. By combining sensory data, spatial understanding, and real-time decision-making, these systems can interact with three-dimensional environments governed by physical laws.

Unlike traditional AI, which operates exclusively within digital environments, physical AI enables machines to comprehend their surroundings, learn from experience, and adapt their behavior based on dynamic information.

The development of physical AI systems relies on technologies such as neural graphics, synthetic data generation, physics-based simulation, and advanced reasoning models. Additionally, training methods such as reinforcement learning and imitation learning allow these systems to acquire an understanding of essential physical principles and dynamics within virtual environments before being deployed in real-world settings.

Notable real-world applications include Intuitive Surgical’s da Vinci robotic system, which supports high-precision surgery and learns from clinical data to improve outcomes. In advanced robotics, the latest generation of Atlas, developed by Boston Dynamics, is a bipedal robot that uses AI to enhance movement and object manipulation. Tesla’s Optimus humanoid robot, still under development, builds on the company’s autonomous driving technology, incorporating machine-learning models for real-time vision, object recognition, and autonomous navigation.

Despite significant advances in AI-driven robotics, adoption still faces major challenges related to regulation, data management, and cybersecurity. In terms of its impact on the future of work, the most successful initiatives follow a collaborative human–machine model: robots automate repetitive and high-precision tasks, detect errors, and reduce workplace risks, while humans focus on supervision, decision-making, and complex problem-solving. Achieving this balance requires organizations to rethink roles, processes, and training programs to ensure effective human–robot collaboration.

Market projections reflect strong growth. KBV Research, in its report Artificial Intelligence Robots Market, estimates that the global AI robotics market will reach USD 37.9 billion by 2027, with a compound annual growth rate (CAGR) of 32.3% between 2021 and 2027. This outlook is reinforced by Statista, which projects that the industrial AI robotics market will grow to USD 65.02 billion by 2030.
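To show what a CAGR of this size implies, the sketch below works backwards from the KBV Research figures. It assumes 2021 is the base year and that growth compounds over six annual periods; the report may define its baseline differently, so the derived 2021 value is an illustration and not a figure from the report. Both function names are hypothetical.

```python
def implied_base_value(final_value, rate, years):
    """Starting value that grows to final_value at a constant annual rate."""
    return final_value / (1 + rate) ** years


def cagr(start_value, final_value, years):
    """Compound annual growth rate between two values."""
    return (final_value / start_value) ** (1 / years) - 1


# Assumption: six compounding periods between 2021 and 2027.
base_2021 = implied_base_value(final_value=37.9, rate=0.323, years=6)
print(f"Implied 2021 market size: USD {base_2021:.2f} billion")

# Round trip: recomputing the rate from the two endpoints returns 32.3%.
print(f"Recovered CAGR: {cagr(base_2021, 37.9, 6):.1%}")
```

Under these assumptions the market would have started at roughly USD 7 billion, meaning it grows more than fivefold over the forecast period.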